As neural networks grow in influence and capability, understanding the mechanisms behind their decisions remains a fundamental scientific challenge. This gap between performance and understanding limits our ability to predict model behavior, ensure reliability, and detect sophisticated adversarial or deceptive behavior. Many of the deepest scientific mysteries in machine learning may remain out of reach if we cannot look inside the black box.

Mechanistic interpretability addresses this challenge by developing principled methods to analyze and understand a model’s internals (its weights and activations) and to use this understanding to gain greater insight into the model’s behavior and the computation underlying it.

The field has grown rapidly, with sizable communities in academia, industry and independent research, 140+ papers submitted to our ICML 2024 workshop, dedicated startups, and a rich ecosystem of tools and techniques. This workshop aims to bring together diverse perspectives from the community to discuss recent advances, build common understanding and chart future directions.

See our Call for Papers for submission details and topics of interest.

Keynote Speakers

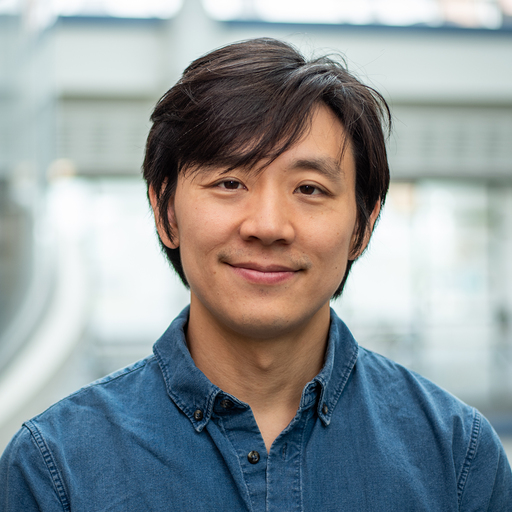

Chris Olah

Interpretability Lead and Co-founder, Anthropic

Been Kim

Senior Staff Research Scientist, Google DeepMind

Sarah Schwettmann

Co-founder, Transluce

The first Mechanistic Interpretability Workshop (ICML 2024).

Organizing Committee

Neel Nanda

Senior Research Scientist, Google DeepMind

Andrew Lee

Post-doc, Harvard

Andy Arditi

PhD Student, Northeastern University

Jemima Jones

Operations Lead

Stefan Heimersheim

Member of Technical Staff, FAR.AI

Anna Soligo

PhD Student, Imperial

Martin Wattenberg

Professor, Harvard University & Principal Research Scientist, Google DeepMind

Atticus Geiger

Lead, Pr(Ai)²R Group

Julius Adebayo

Founder and Researcher, Guide Labs

Kayo Yin

3rd year PhD student, UC Berkeley

Fazl Barez

Senior Research Fellow, Oxford Martin AI Governance Initiative

Lawrence Chan

Researcher, METR

Matthew Wearden

London Director, MATS

Questions? Email neurips2025@mechinterpworkshop.com

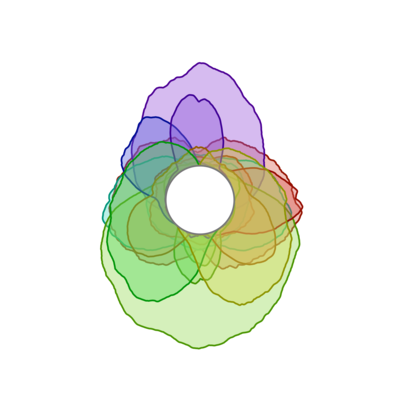

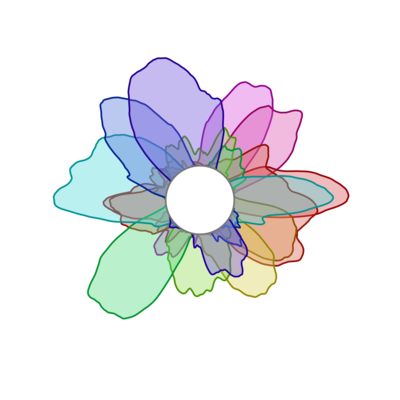

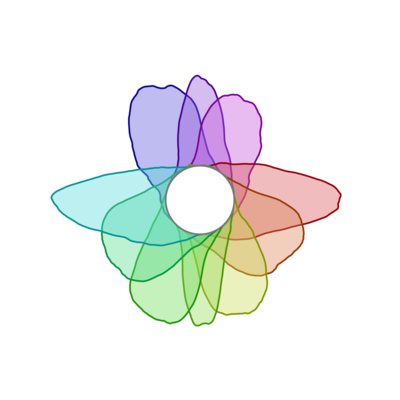

What are those beautiful rainbow flower things?

These are visualizations of "curve detector" neurons from early mechanistic interpretability research. Learn more in the Curve Detectors article on Distill.